In the ever-evolving landscape of the banking and finance industry, the prevalence of Excel’s pivot tables is nothing short of astonishing. Yet, amidst this apparent chaos, a remarkable order emerges. Data consistency remains a steadfast hallmark, even when accessibility to the underlying CSV files may seem out of reach.

As organisations contemplate the transition to the Modern Data Stack, apprehension can loom large. Python, and more specifically, the versatile Pandas library, offer the power of automation to overcome these challenges. Today, we embark on a journey to unveil the secrets of extracting a grid of pivot tables from Excel, each destined for its own CSV file. With a touch of syntax and strategic value adjustments, these transformed datasets can seamlessly find their home in AWS Lambda.

This journey will empower you with the tools and knowledge to bridge the gap between Excel’s structured data and the modern cloud-driven data solutions, ultimately propelling your financial endeavours into the digital age.

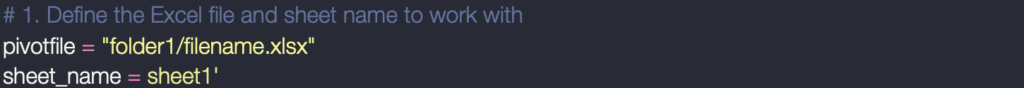

Step 1: Define the Pivot Table File and Sheet

In this crucial initial step, we explicitly define the file path and the specific sheet name where our pivot table data is located. By assigning these details to variables, we enhance the code’s readability and maintainability. This practice ensures that anyone returning to the code, including the original coder, can readily identify the exact source of the data. Consequently, this approach promotes code transparency and reduces the risk of errors or misunderstandings when working with data sources. This level of explicitness is fundamental in data engineering, where precise data handling is paramount.

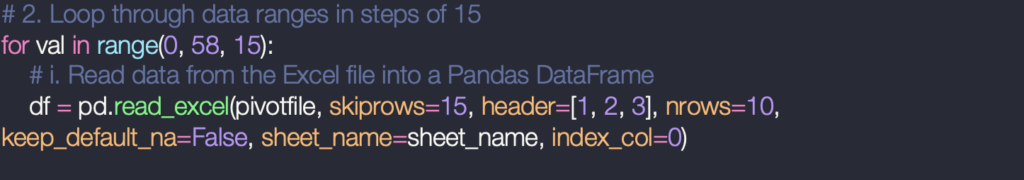

Step 2: Loop Through Data Ranges & Read Excel Data into Pandas DataFrame

In the following step, we initiate a pivotal loop that enables us to navigate through designated data ranges systematically. This looping mechanism is particularly advantageous when dealing with extensive datasets. The values for this loop are meticulously chosen based on the vertical space that separates each pivot table and the incremental step required to traverse from one table to the next.

Within the confines of this loop, we harness the versatile Pandas library to ingest data from our Excel source. This phase demands precise configuration, and that’s precisely what we achieve here. We judiciously define a set of parameters to ensure that the data extraction process aligns with our needs:

1. Skip Rows: We specify the number of initial rows to bypass during data ingestion. This is essential when dealing with Excel files that have header information or metadata at the beginning.

2. Multi-Level Headers: We establish a structured hierarchy for the column headers by specifying multiple levels (in this case, three levels). This ensures that our DataFrame retains the intricate organization of the data.

3. Row Limit: To control the amount of data we load into memory, we set a limit on the number of rows to be read. This feature is invaluable when dealing with extensive datasets where loading everything at once might not be feasible.

4. Index Column: We designate a column to serve as the index for our DataFrame, which helps in quick and efficient data retrieval and manipulation.

This level of meticulous parameterization, carried out in tandem with the iterative loop, guarantees that we efficiently and systematically extract the data we require from Excel, regardless of the dataset’s size or complexity. It showcases the power of Pandas in data handling and the importance of thoughtful configuration when working with diverse data sources.

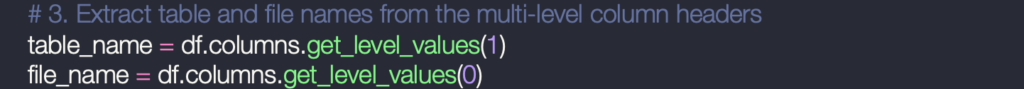

Step 3: Data Column and Table Name Extraction

In this step, we delve into the intricacies of our DataFrame’s multi-indexed column headers. As you rightly noted, our headers are structured with multiple levels, as defined with the `header=[1,2,3]` parameter earlier. These multi-level headers are a powerful way to organize and categorize the data within the DataFrame.

Now, let’s explore how we extract meaningful information from these headers:

1. Table Names Extraction: To extract the table names, we rely on the `get_level_values(1)` method. This method effectively reaches into the second level of the column headers and retrieves the distinct names associated with the tables in our data. Each unique table name is invaluable for maintaining clarity and structure in our dataset.

2. File Names Extraction: In a similar vein, we use the `get_level_values(0)` method to extract the file names. This method allows us to access the top level of the multi-indexed headers, where file names are stored. By doing so, we gain access to information about the specific source file for each section of the data.As you rightly pointed out, these extractions serve a vital purpose: they provide us with the essential metadata necessary for creating meaningful CSV filenames. The use of multi-indexed headers not only keeps our data organized but also facilitates the systematic extraction of relevant information, ultimately contributing to the clarity and comprehensibility of the data engineering process. This demonstrates the power and versatility of Pandas in handling complex data structures with ease and precision.

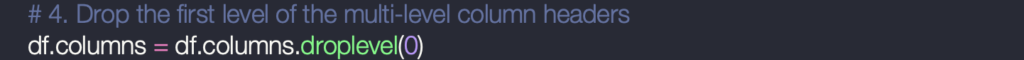

Step 4: Remove Multi-Level Header

To simplify the Data Frame, we remove one level of the multi-level column header. We will want to flatten this table into a single index and columns table to be useable in a data warehouse and to model on top of this. Therefore, we would like to set these multi-indexes as columns.

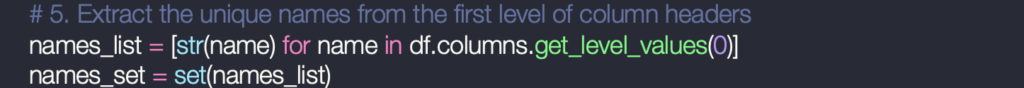

Step 5: Extract and Filter Data

We collect unique names from the first level of the DataFrame’s columns. This is useful for filtering the pivot tables and splitting into their own sections.

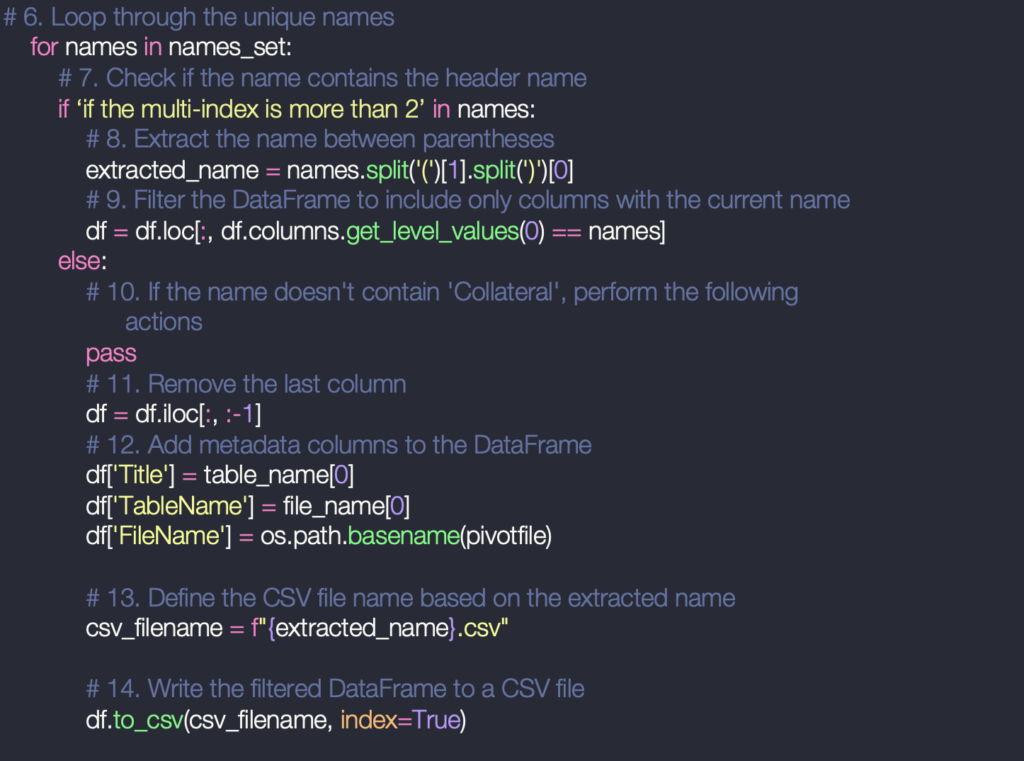

Step 6: Data Filtering and CSV File Creation

In this culminating step, we undertake the crucial task of data filtering and DataFrame preparation before the data is ultimately saved as CSV files. Let’s delve into this process in detail:

1. Data Filtering Based on ‘Multi-index’: One of the pivotal actions in this step involves filtering the data based on a specific condition. Here, we focus on the presence of the term ‘Multi-index’ within the column names. This condition acts as a filter, allowing us to extract only the sections of the data that are relevant to ‘Multi-index’.’

– The extracted data is further refined to include only columns with the specified name. This precision ensures that we capture the exact data elements we require for our analysis.

2. Metadata Addition: Beyond filtering, we enrich the DataFrame with metadata that bestows context and meaning to our data. Three critical pieces of metadata are introduced:

– ‘Title’: This metadata identifies the overall title or purpose of the data section. It adds clarity to what the data represents in the broader context.

– ‘TableName’: This metadata indicates the specific table or section from which the data is derived. It provides insight into the data’s origin.

– ‘FileName’: This metadata discloses the name of the source file (Excel file) from which the data originates. It ensures traceability and data source transparency.

3. CSV File Creation and Saving:

– With the DataFrame well-prepared, we embark on the final step of saving the data as CSV files. The filenames are crafted based on the ‘extracted_name,’ which is obtained during the filtering process. This approach guarantees that the saved CSV files bear meaningful and relevant names.

– The ‘to_csv’ method is employed to write the filtered and enriched DataFrame to a CSV file, ensuring that the data is stored in a structured and accessible format for future analysis and use.

In summary, this final step is where the data transformation process culminates, and our meticulously processed data is captured in a form that is both structured and enriched with essential metadata. This approach not only facilitates data analysis but also ensures transparency and traceability, vital aspects of effective data engineering and management.

Our journey today takes us into the realm of extracting pivot tables from Excel. These tables, often nestled within multi-level headers, hold valuable insights. With a deft touch of Python syntax and strategic data adjustments, we’ll unveil the secrets of isolating these pivot tables and converting them into individual CSV files. The result? Data that is primed and ready for integration into AWS Lambda or other modern data solutions.

This journey isn’t just about data extraction; it’s about empowerment. By the end of this adventure, you’ll wield the tools and knowledge to bridge the gap between Excel’s structured data and the boundless potential of cloud-driven data solutions. Whether you’re in banking, finance, or any industry that relies on data, this journey promises to elevate your financial endeavours into the digital age, where data is a strategic asset waiting to be harnessed.